1. System Environment

Ubuntu 16.04

VMware

Hadoop 2.7.0

Java 1.8.0_111

master:192.168.19.128

slave1:192.168.19.129

slave2:192.168.19.130

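Clone slave2 to create the new node slave3. Set the clone's hostname to slave3 and its static IP to 192.168.19.131. Then add the new node to /etc/hosts on master, slave1 and slave2. slave3's own hosts file was inherited from slave2, so it needs the same entry: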

```
127.0.0.1 localhost
127.0.1.1 localhost.localdomain localhost

# The following lines are desirable for IPv6 capable hosts
::1 localhost ip6-localhost ip6-loopback
ff02::1 ip6-allnodes
ff02::2 ip6-allrouters

# hadoop nodes
192.168.19.128 master
192.168.19.129 slave1
192.168.19.130 slave2
# new node
192.168.19.131 slave3
```
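Editing /etc/hosts by hand on four machines is easy to get wrong. The sketch below is a minimal bash helper, not part of Hadoop; `add_host_entry` is a hypothetical name. It assumes hosts lines have the form `IP hostname [aliases...]`. It first drops any earlier line whose hostname column is the given name, then appends the new mapping. Because of that, re-running it, or running it after an IP change, never leaves duplicate entries.

```shell
# Hypothetical helper (bash): map NAME to IP exactly once in a hosts-style file.
# Assumes lines look like "IP hostname [aliases...]"; comment lines are kept as-is.
add_host_entry() {
    local hosts_file=$1 ip=$2 name=$3 tmp
    tmp=$(mktemp) || return 1
    # Copy every line except a previous (non-comment) mapping whose hostname is $name.
    awk -v n="$name" '$1 !~ /^#/ && $2 == n { next } { print }' "$hosts_file" > "$tmp"
    # Append the current mapping.
    printf '%s %s\n' "$ip" "$name" >> "$tmp"
    # Write back through the existing file so its owner and permissions are kept.
    cat "$tmp" > "$hosts_file"
    rm -f "$tmp"
}
```

For example, as root on each of the four nodes: `add_host_entry /etc/hosts 192.168.19.131 slave3`.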

2. Adding a Node Dynamically

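Because the new node was created by cloning, its Hadoop configuration can be used as-is. For setting up the Hadoop distributed environment itself, see: Setting up a Hadoop 2.7.0 fully distributed environment on Ubuntu 16.04 (http://www.linuxdiyf.com/linux/27090.html)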

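1] On master, edit etc/hadoop/slaves and add the new node slave3. The start-dfs.sh and start-yarn.sh scripts read this file, so slave3 will be started together with the rest of the cluster from now on: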

```
slave1
slave2
slave3
```
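Every name in the slaves file must resolve on master, or the start scripts cannot reach that node over ssh. Below is a minimal bash sanity check under that assumption. `missing_in_hosts` is a hypothetical helper, not part of Hadoop, and it consults only a hosts file, not DNS. It prints each slave hostname that no non-comment line of the hosts file lists.

```shell
# Hypothetical helper (bash): print slaves-file hostnames that a hosts file does not map.
# Only the given hosts file is consulted (no DNS lookups).
missing_in_hosts() {
    local slaves_file=$1 hosts_file=$2 node
    while IFS= read -r node || [ -n "$node" ]; do
        node=${node//[[:space:]]/}          # strip stray whitespace
        case $node in ''|\#*) continue ;; esac   # skip blank lines and comments
        # Found if some non-comment hosts line lists $node as hostname or alias (fields 2..NF).
        if ! awk -v n="$node" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                               END { exit !found }' "$hosts_file"; then
            printf '%s\n' "$node"
        fi
    done < "$slaves_file"
}
```

For example, `missing_in_hosts etc/hadoop/slaves /etc/hosts` on master should print nothing once the hosts entry from section 1 is in place.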

2] On the new node slave3, start the DataNode with ./sbin/hadoop-daemon.sh start datanode:

```
hadoop@slave3:~/software/hadoop-2.7.0$ ./sbin/hadoop-daemon.sh start datanode
starting datanode, logging to /home/hadoop/software/hadoop-2.7.0/logs/hadoop-hadoop-datanode-slave3.out
hadoop@slave3:~/software/hadoop-2.7.0$ jps
4696 DataNode
4765 Jps
```

3] On slave3, start the NodeManager with ./sbin/yarn-daemon.sh start nodemanager:

```
hadoop@slave3:~/software/hadoop-2.7.0$ ./sbin/yarn-daemon.sh start nodemanager
starting nodemanager, logging to /home/hadoop/software/hadoop-2.7.0/logs/yarn-hadoop-nodemanager-slave3.out
hadoop@slave3:~/software/hadoop-2.7.0$ jps
4696 DataNode
4795 NodeManager
4846 Jps
```
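Checking the jps listing by eye works, but the same check can be scripted. Below is a minimal bash sketch. `check_daemons` is a hypothetical helper that reads jps output (`<pid> <MainClassName>` per line) on stdin. It prints every expected daemon that is missing and returns non-zero if any is missing.

```shell
# Hypothetical helper (bash): verify that jps output on stdin lists the given daemons.
# Prints "missing: <name>" for each absent daemon; returns 1 if any is missing.
check_daemons() {
    local jps_out name missing=0
    jps_out=$(cat)
    for name in "$@"; do
        # jps prints "<pid> <MainClassName>"; compare the class-name column exactly.
        if ! printf '%s\n' "$jps_out" | awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
            printf 'missing: %s\n' "$name"
            missing=1
        fi
    done
    return "$missing"
}
```

On slave3 this would be used as `jps | check_daemons DataNode NodeManager`.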

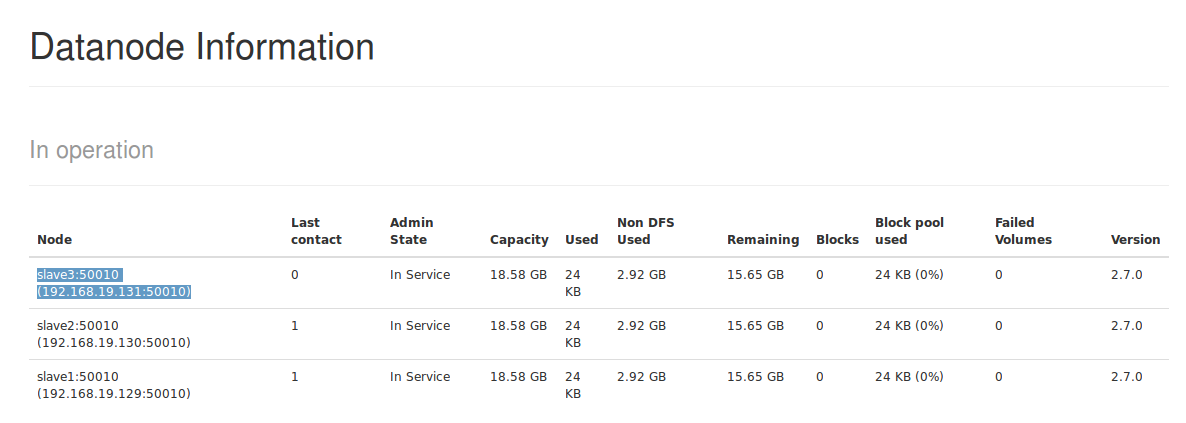

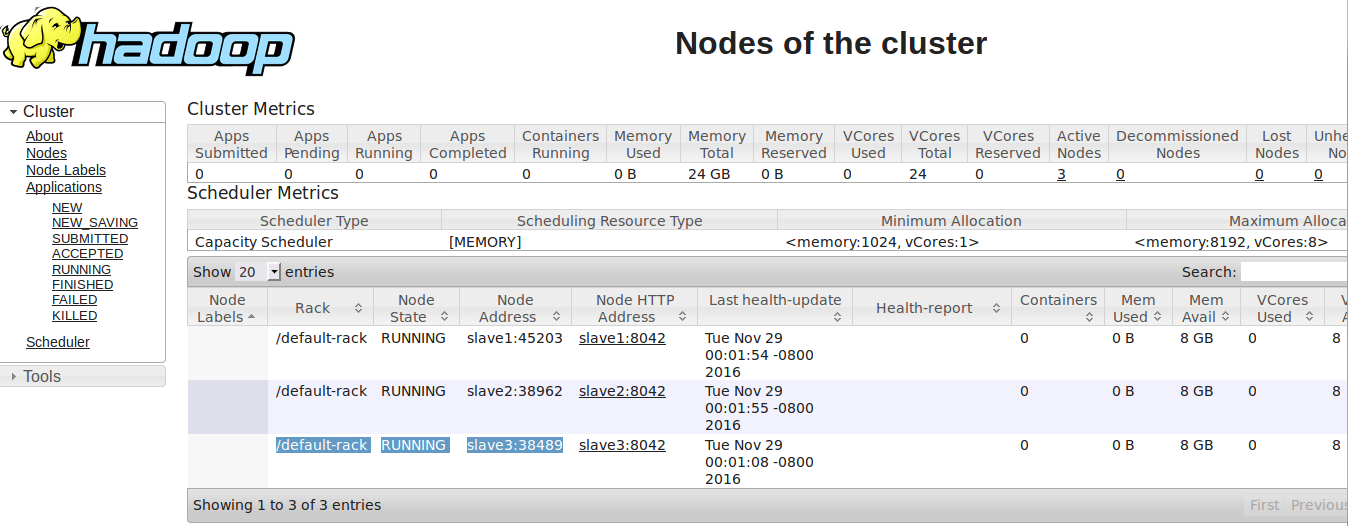

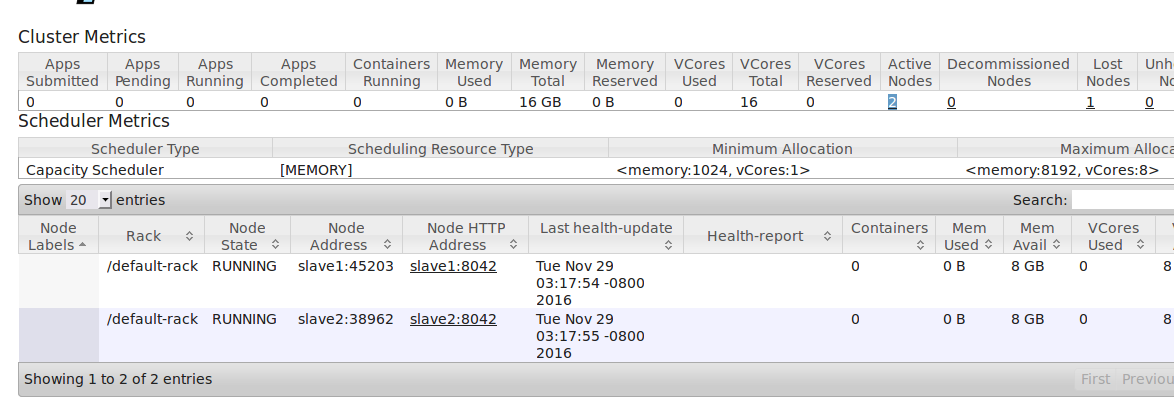

The new node slave3 now runs a DataNode and a NodeManager, so it has joined the cluster without restarting any of the existing nodes.
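Besides the web UI, the join can be confirmed from master. `./bin/hdfs dfsadmin -report` lists the DataNodes, and `./bin/yarn node -list` lists the NodeManagers. The bash sketch below pulls the live or dead DataNode count out of the report. `datanode_count` is a hypothetical helper, and it assumes the `Live datanodes (N):` / `Dead datanodes (N):` lines that Hadoop 2.7.0 prints.

```shell
# Hypothetical helper (bash): print N from "Live datanodes (N):" or "Dead datanodes (N):".
# Reads dfsadmin -report output on stdin; prints nothing if the line is absent.
datanode_count() {
    local kind=$1   # "Live" or "Dead", capitalized as in the report
    sed -n "s/^${kind} datanodes (\([0-9]*\)):.*/\1/p"
}
```

Usage: `./bin/hdfs dfsadmin -report | datanode_count Live`.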

3. Removing a Node Dynamically

1] To enable dynamic node removal, create an excludes file in etc/hadoop/ that lists the nodes to decommission:

```
slave3
```
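The excludes file holds one hostname per line. Below is a minimal bash sketch for adding a node to it without creating duplicates. `add_to_excludes` is a hypothetical helper, not a Hadoop command. The path you pass must be the same file that dfs.hosts.exclude points to in the next step.

```shell
# Hypothetical helper (bash): add a hostname to an excludes file, one host per line.
add_to_excludes() {
    local excludes_file=$1 node=$2
    # Create the file on first use.
    touch "$excludes_file" || return 1
    # If the last line has no trailing newline, add one so entries don't run together.
    if [ -s "$excludes_file" ] && [ -n "$(tail -c 1 "$excludes_file")" ]; then
        printf '\n' >> "$excludes_file"
    fi
    # Append only if that exact hostname line is not already present.
    grep -qxF "$node" "$excludes_file" || printf '%s\n' "$node" >> "$excludes_file"
}
```

For example: `add_to_excludes /home/hadoop/software/hadoop-2.7.0/etc/hadoop/excludes slave3`.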

2] Edit etc/hadoop/hdfs-site.xml and add the following property inside <configuration>:

```xml
<property>
  <name>dfs.hosts.exclude</name>
  <value>/home/hadoop/software/hadoop-2.7.0/etc/hadoop/excludes</value>
</property>
```

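3] Edit mapred-site.xml. Note that mapred.hosts.exclude is read by the MRv1 JobTracker. On a YARN cluster like this one, NodeManagers are excluded through yarn.resourcemanager.nodes.exclude-path in yarn-site.xml, followed by yarn rmadmin -refreshNodes: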

```xml
<property>
  <name>mapred.hosts.exclude</name>
  <value>/home/hadoop/software/hadoop-2.7.0/etc/hadoop/excludes</value>
  <final>true</final>
</property>
```

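4] After editing these configuration files on the NameNode (master), run ./bin/hadoop dfsadmin -refreshNodes so that the NameNode rereads the excludes file. As the output notes, ./bin/hdfs dfsadmin -refreshNodes is the non-deprecated form: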

```
hadoop@master:~/software/hadoop-2.7.0$ ./bin/hadoop dfsadmin -refreshNodes
DEPRECATED: Use of this script to execute hdfs command is deprecated.
Instead use the hdfs command for it.

Refresh nodes successful
```

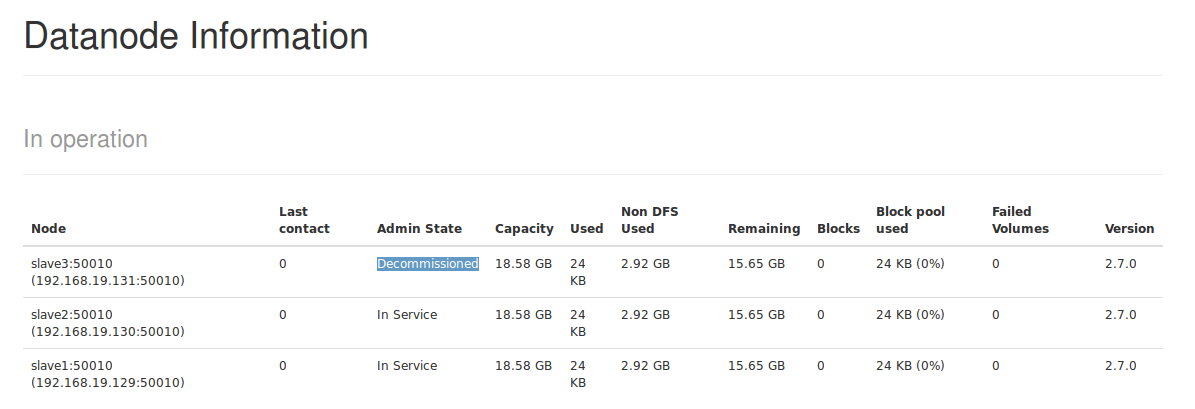

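5] Check slave3's status with ./bin/hadoop dfsadmin -report or in the web UI. It changes from Normal to Decommission in progress and then to Decommissioned. Wait for Decommissioned before continuing, because until then HDFS is still copying slave3's blocks to the other DataNodes.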
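The wait can be scripted by reading the status out of the report. Below is a minimal bash sketch. `decommission_status` is a hypothetical helper, and it assumes the Hadoop 2.7.0 report layout seen in step 7, where each node has a `Hostname:` line followed by a `Decommission Status : ...` line.

```shell
# Hypothetical helper (bash): print the decommission status of one host
# from dfsadmin -report output on stdin.
decommission_status() {
    local host=$1
    awk -v h="$host" '
        /^Hostname: / { cur = $2 }                      # remember which node block we are in
        /^Decommission Status : / && cur == h {
            sub(/^Decommission Status : /, "")          # keep only the status text
            print
            exit
        }'
}
```

A polling loop on master could then be `until [ "$(./bin/hdfs dfsadmin -report | decommission_status slave3)" = "Decommissioned" ]; do sleep 30; done`. The 30-second interval is an arbitrary choice.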

6] On slave3, stop the DataNode and NodeManager processes by running ./sbin/hadoop-daemon.sh stop datanode and ./sbin/yarn-daemon.sh stop nodemanager:

```
hadoop@slave3:~/software/hadoop-2.7.0$ ./sbin/hadoop-daemon.sh stop datanode
stopping datanode
hadoop@slave3:~/software/hadoop-2.7.0$ jps
5104 Jps
4795 NodeManager
hadoop@slave3:~/software/hadoop-2.7.0$ ./sbin/yarn-daemon.sh stop nodemanager
stopping nodemanager
hadoop@slave3:~/software/hadoop-2.7.0$ jps
5140 Jps
```

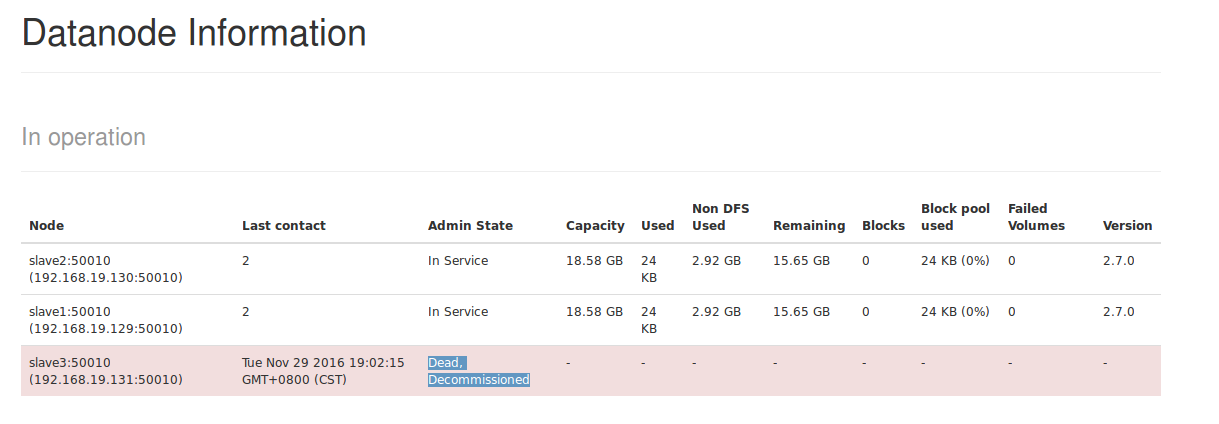

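7] Check the node status again with ./bin/hadoop dfsadmin -report or in the web UI. slave3 is now listed under Dead datanodes with status Decommissioned, while slave1 and slave2 remain live: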

```
hadoop@master:~/software/hadoop-2.7.0$ ./bin/hadoop dfsadmin -report
DEPRECATED: Use of this script to execute hdfs command is deprecated.
Instead use the hdfs command for it.

Configured Capacity: 39891361792 (37.15 GB)
Present Capacity: 33617842176 (31.31 GB)
DFS Remaining: 33617793024 (31.31 GB)
DFS Used: 49152 (48 KB)
DFS Used%: 0.00%
Under replicated blocks: 0
Blocks with corrupt replicas: 0
Missing blocks: 0
Missing blocks (with replication factor 1): 0

-------------------------------------------------
Live datanodes (2):

Name: 192.168.19.130:50010 (slave2)
Hostname: slave2
Decommission Status : Normal
Configured Capacity: 19945680896 (18.58 GB)
DFS Used: 24576 (24 KB)
Non DFS Used: 3136724992 (2.92 GB)
DFS Remaining: 16808931328 (15.65 GB)
DFS Used%: 0.00%
DFS Remaining%: 84.27%
Configured Cache Capacity: 0 (0 B)
Cache Used: 0 (0 B)
Cache Remaining: 0 (0 B)
Cache Used%: 100.00%
Cache Remaining%: 0.00%
Xceivers: 1
Last contact: Tue Nov 29 03:19:56 PST 2016

Name: 192.168.19.129:50010 (slave1)
Hostname: slave1
Decommission Status : Normal
Configured Capacity: 19945680896 (18.58 GB)
DFS Used: 24576 (24 KB)
Non DFS Used: 3136794624 (2.92 GB)
DFS Remaining: 16808861696 (15.65 GB)
DFS Used%: 0.00%
DFS Remaining%: 84.27%
Configured Cache Capacity: 0 (0 B)
Cache Used: 0 (0 B)
Cache Remaining: 0 (0 B)
Cache Used%: 100.00%
Cache Remaining%: 0.00%
Xceivers: 1
Last contact: Tue Nov 29 03:19:57 PST 2016

Dead datanodes (1):

Name: 192.168.19.131:50010 (slave3)
Hostname: slave3
Decommission Status : Decommissioned
Configured Capacity: 0 (0 B)
DFS Used: 0 (0 B)
Non DFS Used: 0 (0 B)
DFS Remaining: 0 (0 B)
DFS Used%: 100.00%
DFS Remaining%: 0.00%
Configured Cache Capacity: 0 (0 B)
Cache Used: 0 (0 B)
Cache Remaining: 0 (0 B)
Cache Used%: 100.00%
Cache Remaining%: 0.00%
Xceivers: 0
Last contact: Tue Nov 29 03:02:15 PST 2016
```

8] Run ./sbin/start-balancer.sh to rebalance blocks across the remaining DataNodes:

```
hadoop@master:~/software/hadoop-2.7.0$ ./sbin/start-balancer.sh
starting balancer, logging to /home/hadoop/software/hadoop-2.7.0/logs/hadoop-hadoop-balancer-master.out
```
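The balancer treats a DataNode as balanced when the node's utilization is within `threshold` percentage points of the cluster-wide utilization. The default threshold is 10. A different value can be passed through the start script, e.g. `./sbin/start-balancer.sh -threshold 5`. The bash sketch below applies that comparison to the DFS Used% figures printed by dfsadmin -report. `within_threshold` is a hypothetical helper, and the comparison only approximates the balancer's internal calculation. Pass plain numbers without the `%` sign.

```shell
# Hypothetical helper (bash): succeed if a node's usage percentage is within
# THRESHOLD percentage points of the cluster-wide usage percentage.
#   $1 = node DFS Used%   $2 = cluster DFS Used%   $3 = threshold (percentage points)
within_threshold() {
    awk -v u="$1" -v avg="$2" -v t="$3" 'BEGIN {
        d = u - avg
        if (d < 0) d = -d          # absolute deviation from the cluster average
        exit !(d <= t)             # exit 0 when balanced, 1 otherwise
    }'
}
```

For example, `within_threshold 12 10 10` succeeds, while `within_threshold 25 10 10` fails.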